Join us on Zoom here

Sign up here to receive email communications

Visit our YouTube channel for the recordings

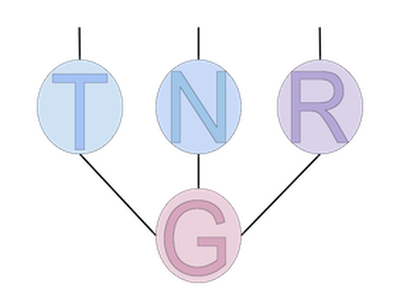

Join the TeNRead Slack channel for discussions

Upcoming Talks, Winter 2025

Past Talks, Winter 2024

Apr 23, 2024

-

Title: Multilinear Operator Networks

Presenter: Yixin Cheng

Reading

Abstract: Despite the remarkable capabilities of deep neural networks in image recognition, the dependence on activation functions remains a largely unexplored area and has yet to be eliminated. On the other hand, Polynomial Networks are a class of models that do not require activation functions but have yet to perform on par with modern architectures. In this work, we aim to close this gap and propose MONet, which relies solely on multilinear operators. The core layer of MONet, called Mu-Layer, captures multiplicative interactions of the elements of the input token. MONet captures high-degree interactions of the input elements, and we demonstrate the efficacy of our approach on a series of image recognition and scientific computing benchmarks. The proposed model outperforms prior polynomial networks and performs on par with modern architectures. We believe that MONet can inspire further research on models that use entirely multilinear operations.

Apr 9, 2024

-

Title: Dynamic Tensor Decomposition via Neural Diffusion-Reaction Processes

Presenter: Shikai Fang

Notes Recording Link

Abstract: Tensor decomposition is an important tool for multiway data analysis. In practice, the data is often sparse yet associated with rich temporal information. Existing methods, however, often under-use the time information and ignore the structural knowledge within the sparsely observed tensor entries. To overcome these limitations and to better capture the underlying temporal structure, we propose Dynamic EMbedIngs fOr dynamic Tensor dEcomposition (DEMOTE). We develop a neural diffusion-reaction process to estimate dynamic embeddings for the entities in each tensor mode. Specifically, based on the observed tensor entries, we build a multipartite graph to encode the correlation between the entities. We construct a graph diffusion process to co-evolve the embedding trajectories of the correlated entities and use a neural network to construct a reaction process for each individual entity. In this way, our model can capture both the commonalities and personalities during the evolution of the embeddings for different entities. We then use a neural network to model the entry value as a nonlinear function of the embedding trajectories. For model estimation, we combine ODE solvers to develop a stochastic mini-batch learning algorithm. We propose a stratified sampling method to balance the cost of processing each mini-batch so as to improve the overall efficiency. We show the advantage of our approach in both simulation studies and real-world applications.

Apr 2, 2024

-

Title: Equivariant Polynomials for Graph Neural Networks

Presenter: Bobak Kiani

Speaker Bio: I am a postdoc at Harvard University in the Applied Mathematics and Computer Science department. Before this, I was a PhD student at MIT studying Electrical Engineering and Computer Science. My research areas are related to machine learning and quantum computation. In quantum computation, my work focuses on learning the limits and capabilities of algorithms, especially those related to quantum machine learning. On the classical side, I am interested in theoretically studying the role of geometry and symmetries in learning algorithms.

Recording Link

Abstract: I will discuss applications of invariant and equivariant polynomials in learning on graph data. Our work presents an alternative expressiveness hierarchy for graph neural networks (GNNs) based on the ability of GNNs to calculate equivariant polynomials of a certain degree. I will present a basis for any graph equivariant polynomial and show how this basis can enhance expressivity and performance of GNN models. This work is in collaboration with colleagues at Meta AI, Weizmann, and MIT.

Mar 26, 2024

-

Title: Scalable symmetric Tucker tensor decomposition

Presenter: Ruhui Jin

Recording Link

Abstract: We study the best low-rank Tucker decomposition of symmetric tensors. The motivating application is decomposing higher-order multivariate moments. Moment tensors have special structure and are important to various data science problems. We advocate for the projected gradient descent (PGD) method and the higher-order eigenvalue decomposition (HOEVD) approximation as computation schemes. Most importantly, we develop scalable adaptations of the basic PGD and HOEVD methods to decompose sample moment tensors. With the help of implicit and streaming techniques, we evade the overhead cost of building and storing the moment tensor. Such reductions make computing the Tucker decomposition realizable for large data instances in high dimensions. Numerical experiments demonstrate the efficiency of the algorithms and the applicability of moment tensor decompositions to real-world datasets. Finally, we study the convergence on the Grassmannian manifold, and prove that the update sequence derived by the PGD solver achieves first- and second-order criticality.

Mar 19, 2024

-

Title: When randomized algorithms meet tensor decompositions

Presenter: Beheshteh T. Rakhshan

Notes Recording Link

Abstract: Tensor decomposition methods have recently found numerous applications in machine learning. Their ability to perform operations efficiently on very high-dimensional tensor data makes them ubiquitous in data science and machine learning. Due to the curse of dimensionality of tensors, designing efficient algorithms for very high-dimensional tensor data is challenging. On the other hand, finding a decomposition of high-dimensional tensors is crucial. Many tensor decompositions either correspond to difficult non-convex optimization problems or have running time exponential in the order of the tensor. These issues have led several recent works to search for alternatives based on randomization and sampling techniques. In this talk, we will be giving a tutorial on randomized algorithms and their application in tensor network methods.

Mar 12, 2024

-

Title: Approximately Optimal Core Shapes for Tensor Decompositions

Presenter: Mehrdad Ghadiri

Recording Link

Abstract: This work studies the combinatorial optimization problem of finding an optimal core tensor shape, also called multilinear rank, for a size-constrained Tucker decomposition. We give an algorithm with provable approximation guarantees for its reconstruction error via connections to higher-order singular values. Specifically, we introduce a novel Tucker packing problem, which we prove is NP-hard, and give a polynomial-time approximation scheme based on a reduction to the 2-dimensional knapsack problem with a matroid constraint. We also generalize our techniques to tree tensor network decompositions. We implement our algorithm using an integer programming solver, and show that its solution quality is competitive with (and sometimes better than) the greedy algorithm that uses the true Tucker decomposition loss at each step, while also running up to 1000x faster.

Mar 5, 2024

-

Title: SketchySGD: Reliable Stochastic Optimization via Randomized Curvature Estimates

Presenter: Zachary Joseph Frangella

Recording Link

Abstract: We introduce SketchySGD, a stochastic quasi-Newton method that uses sketching to approximate the curvature of the loss function. SketchySGD improves upon existing stochastic gradient methods in machine learning by using randomized low-rank approximations to the subsampled Hessian and by introducing an automated stepsize that works well across a wide range of convex machine learning problems. We show theoretically that SketchySGD with a fixed stepsize converges linearly to a small ball around the optimum. Further, in the ill-conditioned setting we show SketchySGD converges at a faster rate than SGD for least-squares problems. We validate this improvement empirically with ridge regression experiments on real data. Numerical experiments on both ridge and logistic regression problems with dense and sparse data show that SketchySGD equipped with its default hyperparameters can achieve comparable or better results than popular stochastic gradient methods, even when they have been tuned to yield their best performance. In particular, SketchySGD is able to solve an ill-conditioned logistic regression problem with a data matrix that takes more than 840GB RAM to store, while its competitors, even when tuned, are unable to make any progress. SketchySGD’s ability to work out of the box with its default hyperparameters and excel on ill-conditioned problems is an advantage over other stochastic gradient methods, most of which require careful hyperparameter tuning (especially of the learning rate) to obtain good performance and degrade in the presence of ill-conditioning.

Feb 27, 2024

-

Title: HyperAttention: Long-context Attention in Near-Linear Time

Presenter: Amir Zandieh

Recording Link

Abstract: We present an approximate attention mechanism named “HyperAttention” to address the computational challenges posed by the growing complexity of long contexts used in Large Language Models (LLMs). Recent work suggests that in the worst-case scenario, quadratic time is necessary unless the entries of the attention matrix are bounded or the matrix has low stable rank. We introduce two parameters which measure: (1) the max column norm in the normalized attention matrix, and (2) the ratio of row norms in the unnormalized attention matrix after detecting and removing large entries. We use these fine-grained parameters to capture the hardness of the problem. Despite previous lower bounds, we are able to achieve a linear time sampling algorithm even when the matrix has unbounded entries or a large stable rank, provided the above parameters are small. HyperAttention features a modular design that easily accommodates integration of other fast low-level implementations, particularly FlashAttention. Empirically, employing Locality Sensitive Hashing (LSH) to identify large entries, HyperAttention outperforms existing methods, giving significant speed improvements compared to state-of-the-art solutions like FlashAttention. We validate the empirical performance of HyperAttention on a variety of different long-context length datasets. For example, HyperAttention makes the inference time of ChatGLM2 50% faster on 32k context length while perplexity increases from 5.6 to 6.3. On larger context length, e.g., 131k, with causal masking, HyperAttention offers 5-fold speedup on a single attention layer.

Feb 20, 2024

-

Title: Cost-efficient Gaussian tensor network embeddings for tensor-structured inputs

Presenter: Linjian Ma

Speaker Bio: Linjian Ma is currently a research scientist at Meta Platforms. Before this role, he pursued his PhD in Computer Science at the University of Illinois Urbana-Champaign, advised by Edgar Solomonik. His research interests are numerical algorithms and high-performance computing. His PhD thesis focused on developing efficient systems and numerical algorithms for tensor computations with applications in data analytics and quantum simulation.

Notes Recording Link

Abstract: We propose novel sketching algorithms for both tensor decompositions and tensor networks. Sketching involves employing random matrices, also known as embeddings, to project data onto low-dimensional spaces, thereby reducing the computational cost of subsequent operations. In the context of data with a tensor network structure, we present efficient algorithms that utilize tensor network-structured embeddings to sketch the data. Moreover, we provide theoretical bounds on the accuracy of sketching achieved through these algorithms. The proposed sketching techniques are used to accelerate various problems involving tensor networks, including CP and Tucker tensor decompositions and tensor train rounding.

Feb 6, 2024

-

Title: Fast Exact Leverage Score Sampling from Khatri-Rao Products with Applications to Tensor Decomposition

Presenter: Vivek Bharadwaj

Speaker Bio: Vivek Bharadwaj is a PhD student at the University of California, Berkeley advised by James Demmel and Aydın Buluç. His interests include high-performance computing, randomized algorithms, and tensor decompositions at scale. He is affiliated with the BeBOP and PASSION groups at the university and its affiliate, Lawrence Berkeley National Laboratory.

Notes Recording Link

Abstract: We present a data structure to randomly sample rows from the Khatri-Rao product of several matrices according to the exact distribution of its leverage scores. Our proposed sampler draws each row in time logarithmic in the height of the Khatri-Rao product and quadratic in its column count, with persistent space overhead at most the size of the input matrices. As a result, it tractably draws samples even when the matrices forming the Khatri-Rao product have tens of millions of rows each. When used to sketch the linear least squares problems arising in CANDECOMP / PARAFAC tensor decomposition, our method achieves lower asymptotic complexity per solve than recent state-of-the-art methods. Experiments on billion-scale sparse tensors validate our claims, with our algorithm achieving higher accuracy than competing methods as the decomposition rank grows.

Jan 30, 2024

-

Title: Sampling-Based Decomposition Algorithms for Arbitrary Tensor Networks

Presenter: Osman Malik

Speaker Bio: Osman is the 2021 Alvarez Postdoctoral Fellow at Lawrence Berkeley National Laboratory, where he is a member of the Scalable Solvers Group. He received his PhD in Applied Mathematics from the University of Colorado Boulder, where he was advised by Stephen Becker. His research revolves around randomized algorithms, tensor network methods, optimization and quantum computing.

Notes Recording Link

Abstract: We show how to develop sampling-based alternating least squares (ALS) algorithms for decomposition of tensors into any tensor network (TN) format. Provided the TN format satisfies certain mild assumptions, the resulting algorithms have a per-iteration cost that is sublinear in the input size. Unlike most previous works on sampling-based ALS methods for tensor decomposition, the sampling in our framework is done according to the exact leverage score distribution of the design matrices in the ALS subproblems. We implement and test two tensor decomposition algorithms that use our sampling framework in a feature extraction experiment where we compare them against a number of other decomposition algorithms.

Past Talks, Fall 2023

Oct 17, 2023

-

Title: Introduction to Tensor Networks

Presenter: Guillaume Rabusseau

Speaker Bio: Guillaume Rabusseau has been an assistant professor at Université de Montréal since 2018 and has held a Canada CIFAR AI chair at the Mila research institute since 2019. Prior to joining Mila, he was an IVADO postdoctoral research fellow in the Reasoning and Learning Lab at McGill University, where he worked with Prakash Panangaden, Joelle Pineau and Doina Precup. He obtained his PhD in computer science in 2016 at Aix-Marseille University under the supervision of François Denis and Hachem Kadri. His research interests lie at the intersection of theoretical computer science and machine learning, and his work revolves around exploring inter-connections between tensors and machine learning to develop efficient learning methods for structured data relying on linear and multilinear algebra, and on the tensor network formalism.

Notes Recording Link

Abstract: In this talk, I will give a tutorial on tensor networks and briefly present some of their applications in Machine Learning. Tensors are high-order generalizations of vectors and matrices. Similar to matrix factorization techniques, one of the goals of tensor decomposition techniques is to express a tensor as a product of small factors, thus reducing the number of parameters and potentially regularizing machine learning models. While linear algebra is ubiquitous and taught in most undergraduate curricula, tensor and multilinear algebra can be daunting. In this talk, I will try to give an easy and accessible introduction to tensor methods using the tensor network formalism. Tensor networks are an intuitive diagrammatic notation allowing one to easily reason about complex operations on high-order tensors.

Oct 24, 2023

-

Title: Tensor network structure search (TN-SS)

Presenter: Chao Li

Reading Notes Recording Link

Abstract: Tensor networks (TN) represent a formidable framework within machine learning. However, the selection of an effective TN model, a process known as Tensor Network Structure Search (TN-SS), remains a computationally challenging endeavor. In my presentation, I will offer a succinct overview of our approach to this issue. I will concentrate on problem formulation and solution strategies from the standpoint of discrete optimization. Specifically, I will discuss three algorithms and the associated theoretical findings, which have been the subject of my research and were published in ICML conferences in 2020, 2022, and 2023.

Oct 31, 2023

-

Title: TNS and non-equilibrium dynamics

Presenter: Mari Carmen Bañuls

Notes Reading Recording Link

Abstract: Tensor Network States (TNS) are ansatzes that can efficiently represent certain states of quantum many-body systems. In one spatial dimension, the paradigmatic example is the family of Matrix Product States (MPS), extremely powerful to study ground states, low energy excitations, and thermal equilibrium states. Quantum information theory provides tools to understand why TNS are good ansatzes for physically relevant states, and some of the limitations connected to the simulation algorithms. Most significantly, while to some extent TNS can be used to study real time evolution, a full description of the most general out-of-equilibrium setup is often out of reach. In this talk I will present the basic ideas behind TNS algorithms, as well as the limitations and some alternative approaches that try to push their application for dynamical problems.

Nov 7, 2023

-

Title: MultiHU-TD: Multi-feature Hyperspectral Unmixing Based on Tensor Decomposition

Presenter: Mohamad Jouni

Notes Reading Recording Link

Nov 14, 2023

-

Title: What Makes Data Suitable for a Locally Connected Neural Network? A Necessary and Sufficient Condition Based on Quantum Entanglement

Presenter: Yotam Alexander

Notes Recording Link Reading

Nov 21, 2023

-

Title: High-dimensional density estimation with tensorizing flow

Presenter: Yinuo Ren

Notes Recording Link Reading

Nov 28, 2023

-

Title: AI and TN - a love affair

Presenter: Bram Vanhecke

Speaker Bio: I studied at KU Leuven, where I made one publication on supergravity with A. Van Proeyen. I then did a PhD in the group of Frank Verstraete working on tensor networks, specifically PEPS methods, criticality and frustrated systems. Now I'm doing a postdoc at UniWien in the group of Norbert Schuch. Current work continues in tensor networks, but branching out into some machine learning applications with Samuel Wauthier and continuous tensor networks (unpublished).

Notes Recording Link Reading 1 Reading 2

Abstract: Machine learning and tensor networks have grown closer these past few years as they both learn and borrow from one another. We will discuss one project where tensor networks are used to construct a generative model for active inference planning (a theory in neuroscience that models human learning and cognition), hence using tensor networks to do human-inspired machine learning. We will also discuss another project where a technique developed for deep learning, automatic differentiation, is applied to a standard tensor network problem: PEPS optimization. The latter focusses on three major issues in applying AD in this new context, and presents efficient solutions. One of these also has implications for AD users who aren't using it for PEPS.

Dec 4, 2023

-

Title: Enhancing Generative Models via Quantum Correlations

Presenter: Eric R. Anschuetz

Speaker Bio: Eric is a Sherman Fairchild postdoctoral fellow at Caltech, having recently finished his PhD under Aram Harrow at MIT. His research focuses on studying the performance of quantum machine learning algorithms: when they are useful, when they are feasible, and when they break. Previously, Eric has shown how techniques from random matrix theory and Morse theory can be used to study the loss landscapes of quantum machine learning models. Most recently, he has shown how nonuniversal quantum resources can lead to expressivity advantages over classical generative models using techniques from quantum foundations research.

Notes Recording Link Reading

Abstract: Quantum mechanical systems can produce probability distributions that exhibit quantum correlations which are difficult to capture using classical models. We show theoretically that such quantum correlations provide a powerful resource for generative modeling. In particular, we provide an unconditional proof of separation in expressive power between a class of widely used generative models, known as Bayesian networks, and its minimal quantum extension, which can be expressed as a class of matrix product states. We show that this expressivity enhancement is associated with quantum nonlocality and quantum contextuality. The possibility of quantum enhancement demonstrated in this work not only sheds light on the design of useful quantum machine-learning protocols but also provides inspiration to draw on ideas from quantum foundations to improve purely classical algorithms. We also briefly discuss more recent results demonstrating a similar separation between classical sequence models (including encoder-decoder models such as Transformers) and a class of quantized recurrent neural networks.